- We’re closing out our 2021 coordinated inauthentic behavior (CIB) reporting by sharing what we’ve learned over the last year and how we’ll be evolving our threat reporting in 2022.

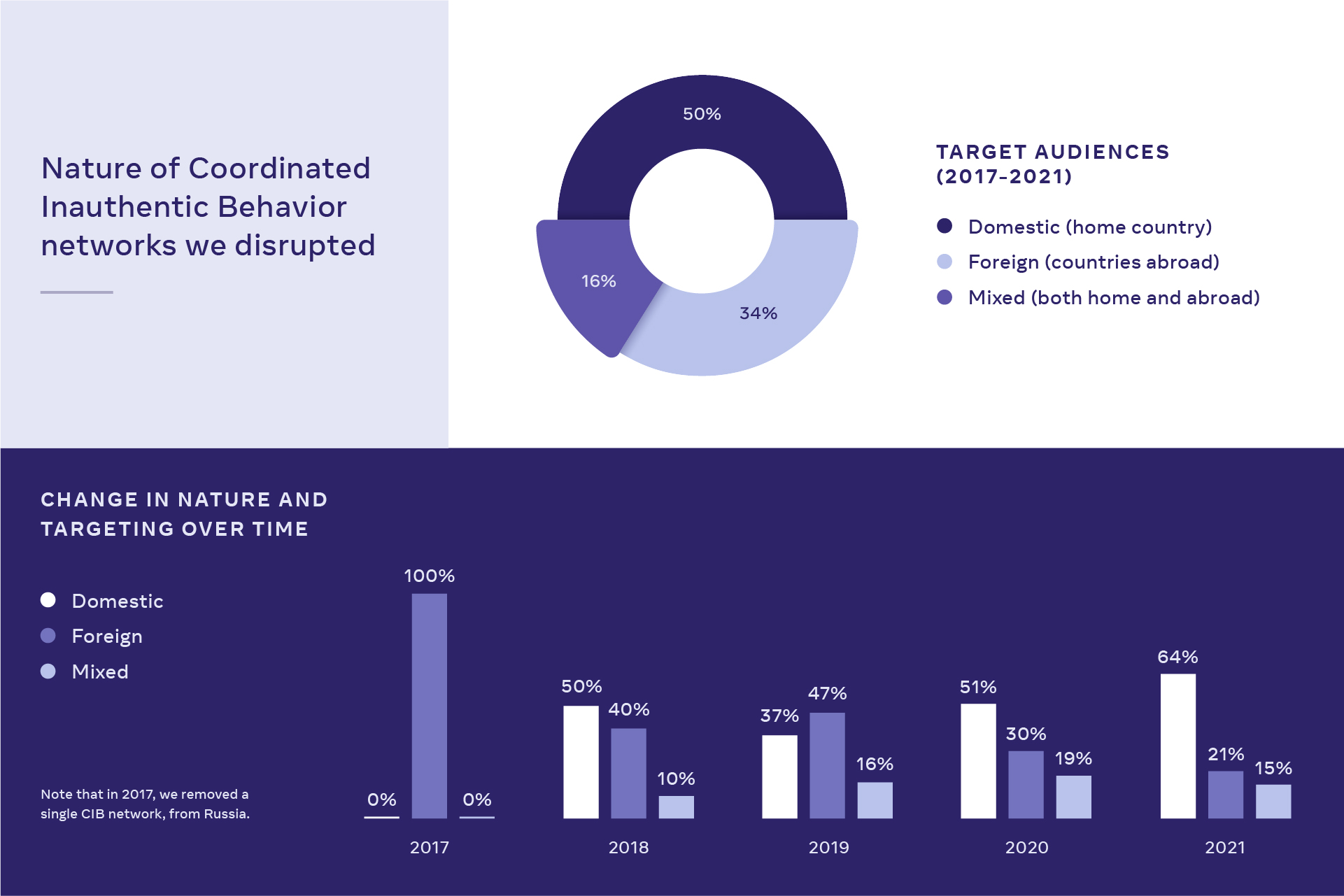

- In 2021, we removed 52 networks that originated in over 30 countries, and the majority of these influence operations targeted domestic audiences in their own countries.

- We’re also sharing our detailed December 2021 CIB report.

Since 2017, we’ve reported on over 150 influence operations, with details on each network takedown, so that people know about the threats we see, whether they come from nation states, commercial firms or unattributed groups. This information sharing has enabled our teams, investigative journalists, government officials and industry peers to better understand and expose internet-wide security risks, including ahead of critical elections.

In 2021, we removed 52 networks that engaged in coordinated efforts to manipulate or corrupt public debate for a strategic goal while relying centrally on fake accounts to mislead people about who’s behind them. They came from 34 countries across Latin America, the Asia-Pacific region, Europe, the Middle East and Africa.

Providing Researchers With Analysis and Data

Since 2018, we’ve also shared information with independent researchers because we know that no single company can tackle these challenges alone. In 2021, we started publishing deep-dive internal research into particularly notable operations we investigated and disrupted, to provide insights into new tactics and how threats evolve (March, April, July, October and November). Last month, we began expanding our beta research platform, which holds about 100 data sets, to more researchers worldwide who study influence operations. Through this platform, we now provide access to raw data that researchers can use to visualize and assess these operations both quantitatively and qualitatively, in addition to sharing our own internal research and analysis.

Expanding Threat Reporting to New Areas

Since 2020, we’ve expanded our threat reporting to new areas, including cyber espionage, financially motivated inauthentic behavior, and coordinated adversarial networks that target people through brigading, mass reporting and other harmful activities. We know that people who abuse communication platforms online don’t neatly fit within our policies or violate only one at a time, and that they constantly change their tactics to evade our enforcement. To provide a more comprehensive view of how these threats evolve and how we counter them, we’ll continue to adapt and expand our reporting into new areas in 2022.

Finally, like our colleagues at Twitter, we’ve seen an evolution in the global threats that companies like ours face and a significant increase in safety risks to our employees around the world. When we believe these risks are high, we will prioritize enforcement and the safety of our teams over publishing our findings. This change won’t affect the actions we take against the deceptive operations we detect, but it means that, in what we hope will be rare cases, we won’t share all network disruptions publicly.

Summary of December 2021 Findings

Our teams continue to focus on finding and removing deceptive campaigns around the world, whether they are foreign or domestic. In December, we removed three networks, one each from Iran, Mexico and Turkey. We have shared information about our findings with industry partners, researchers and policymakers.

We know that influence operations will keep evolving in response to our enforcement, and new deceptive behaviors will emerge. We will continue to refine our enforcement and share our findings publicly. We are making progress rooting out this abuse, but as we’ve said before — it’s an ongoing effort and we’re committed to continually improving to stay ahead. That means building better technology, hiring more people and working closely with law enforcement, security experts and other companies. We also continue to call for updated internet regulations against cross-platform influence operations.

Here are the numbers related to the new CIB networks we removed in December:

- Total number of Facebook accounts removed: 61

- Total number of Instagram accounts removed: 151

- Total number of Pages removed: 305

- Total number of Groups removed: 3

CIB networks removed in December 2021:

- Iran: We removed eight Facebook accounts and 126 Instagram accounts from Iran that primarily targeted audiences in the UK, with a focus on Scotland. We found this activity as a result of our internal investigation into suspected coordinated inauthentic behavior in the region. Our investigation found links to individuals in Iran, including some with a background in teaching English as a foreign language.

- Mexico: We removed 12 Facebook accounts, 172 Pages and 11 Instagram accounts. This network originated primarily in Mexico and targeted audiences in Honduras, Ecuador, El Salvador, the Dominican Republic and Mexico. We began investigating this activity after reviewing public reporting about some of it. Our investigation found links to Wish Win, a PR firm in Mexico.

- Turkey: We removed 41 Facebook accounts, 133 Pages, three Groups and 14 Instagram accounts. They originated primarily in Turkey and mainly targeted people in Libya. We found this network as part of our internal investigation into suspected coordinated inauthentic behavior in Libya connected to prior reports of impersonation. We linked this activity to the Libyan Justice and Construction Party, which is affiliated with the Muslim Brotherhood.

See the full report for more information.

Learn More About Coordinated Inauthentic Behavior

We view CIB as coordinated efforts to manipulate public debate for a strategic goal where fake accounts are central to the operation. There are two tiers of these activities that we work to stop: 1) coordinated inauthentic behavior in the context of domestic, non-government campaigns and 2) coordinated inauthentic behavior on behalf of a foreign or government actor.

Coordinated Inauthentic Behavior (CIB)

When we find campaigns that include groups of accounts and Pages seeking to mislead people about who they are and what they are doing while relying on fake accounts, we remove both inauthentic and authentic accounts, Pages and Groups directly involved in this activity.

Continuous Enforcement

We monitor for efforts to re-establish a presence on Facebook by networks we previously removed. Using both automated and manual detection, we continuously remove accounts and Pages connected to networks we took down in the past.