- Our goal is to provide people with the safest private messaging apps by helping protect people from abuse without weakening encryption.

- We’re sharing an update on our approach to safety on Messenger and Instagram DMs, which focuses on preventing abuse from happening, giving people controls to manage their experience and responding to potential harm effectively.

- Protecting people on our apps requires constant iteration, so we regularly review our policies and update our features in consultation with experts.

Our messaging apps help billions of people stay connected to those who matter most to them. When they connect, they expect their conversations to be private and secure, as most of us do.

We want people to have a trusted private space that’s safe and secure, which is why we’re taking our time to thoughtfully build and implement end-to-end encryption (E2EE) by default across Messenger and Instagram DMs. E2EE is designed to protect people’s private messages so that only the sender and recipient can access them. So if you’re sharing photos or banking details with family and friends, encryption allows that sensitive information to be shared safely.

And while most people use messaging services to connect with loved ones, a small minority use them to do tremendous harm. We have a responsibility to protect our users, and that means setting a clear, thorough approach to safety. We also need to help protect people from abuse without weakening the protections that come with encryption. People should be confident in their privacy while feeling in control of how they avoid unwanted interactions and respond to abuse. Privacy and safety go hand-in-hand, and our goal is to provide people with the safest private messaging apps.

While the complex work of building default E2EE is underway, today we’re sharing an update on our approach to helping keep people safe when messaging on Messenger or Instagram by:

- Working to prevent abuse from happening in the first place,

- Giving people more controls to help them stay safe and

- Responding to reports on potential harm.

Preventing Abuse

Preventing abuse from happening in the first place is the best way to keep people safe. In an end-to-end encrypted environment, we will use artificial intelligence to proactively detect accounts engaged in malicious patterns of behavior instead of scanning your private messages. Our machine learning technology will look across non-encrypted parts of our platforms — like account information and photos uploaded to public spaces — to detect suspicious activity and abuse.

For example, if an adult repeatedly sets up new profiles and tries to connect with minors they don’t know, or messages a large number of strangers, we can intervene, for instance by preventing them from interacting with minors. We can also default minors into private or “friends only” accounts. We’ve started to do this on Instagram and Facebook.

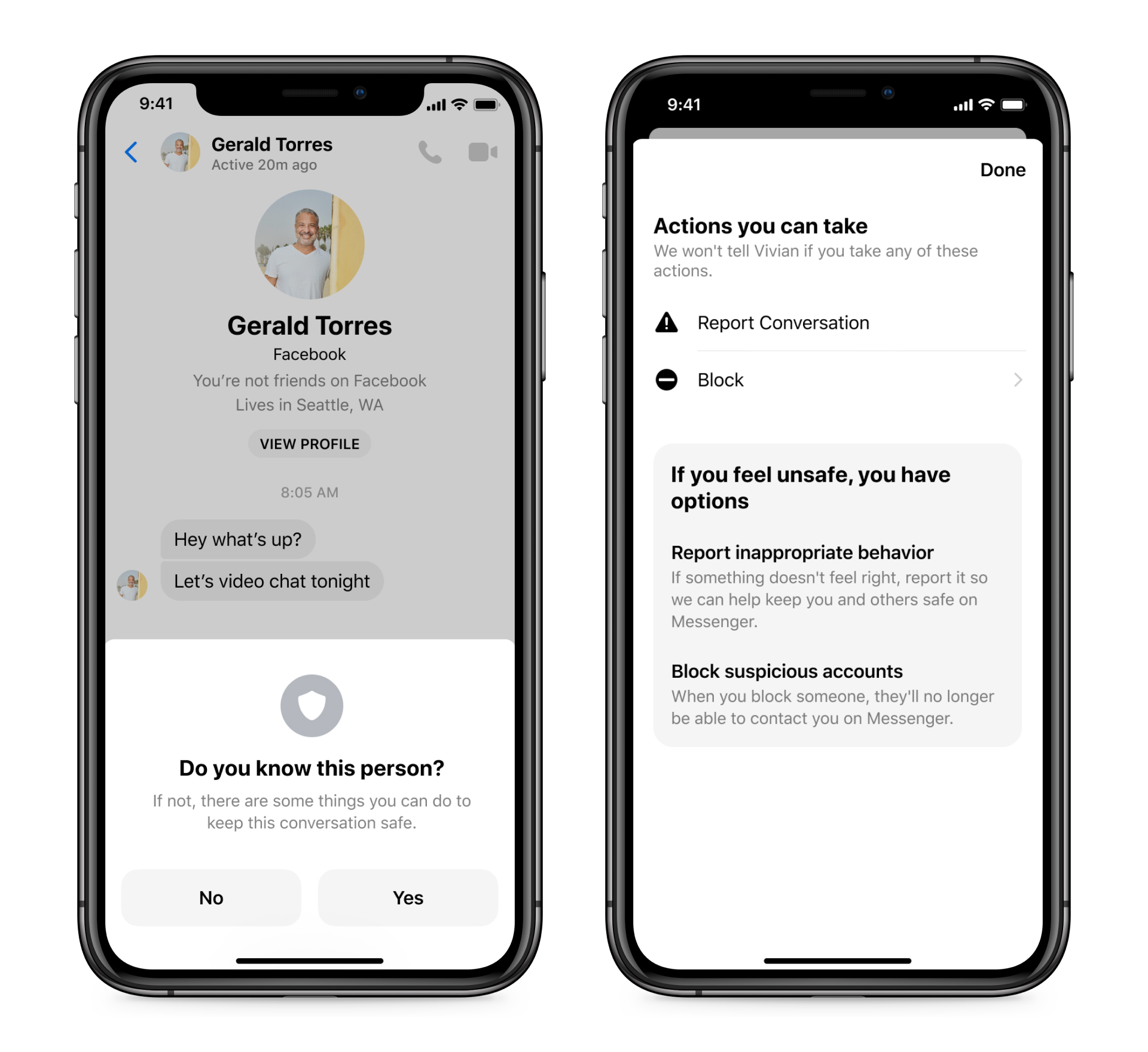

We also educate young people with in-app advice on avoiding unwanted interactions. We’ve seen tremendous success with our safety notices on Messenger, which are banners that provide tips on spotting suspicious activity and taking action to block, report or ignore/restrict someone when something doesn’t seem right. We developed these safety tips using machine learning to help people avoid scams, spot impersonations and, most urgently, flag suspicious adults attempting to connect with minors. In the past month alone, more than 100 million people have seen safety notice banners on Messenger. This feature also works with end-to-end encryption.

Giving People More Choice and Control

In addition to our efforts to prevent harm, we are giving users more control over their messaging inbox to account for the variety of experiences people want. Emerging creators often want increased reach, while other people want tight-knit circles. For example, we recently announced Hidden Words on Instagram so people can decide for themselves which offensive words, phrases and emojis they want to filter into a Hidden Folder. As part of a broader approach to safety, we also filter a list of potentially offensive words, hashtags and emojis by default, even if they don’t break our rules.

Over the past few years, we’ve improved the options for reviewing chat requests and recently built delivery controls that let people choose who can message them directly in their Chats list, whose messages go to their requests folder and who can’t contact them at all. To help people review these requests as safely as possible, we blur images and videos, block links and let people delete chat requests in bulk. (Note: some features may not be available to everyone.)

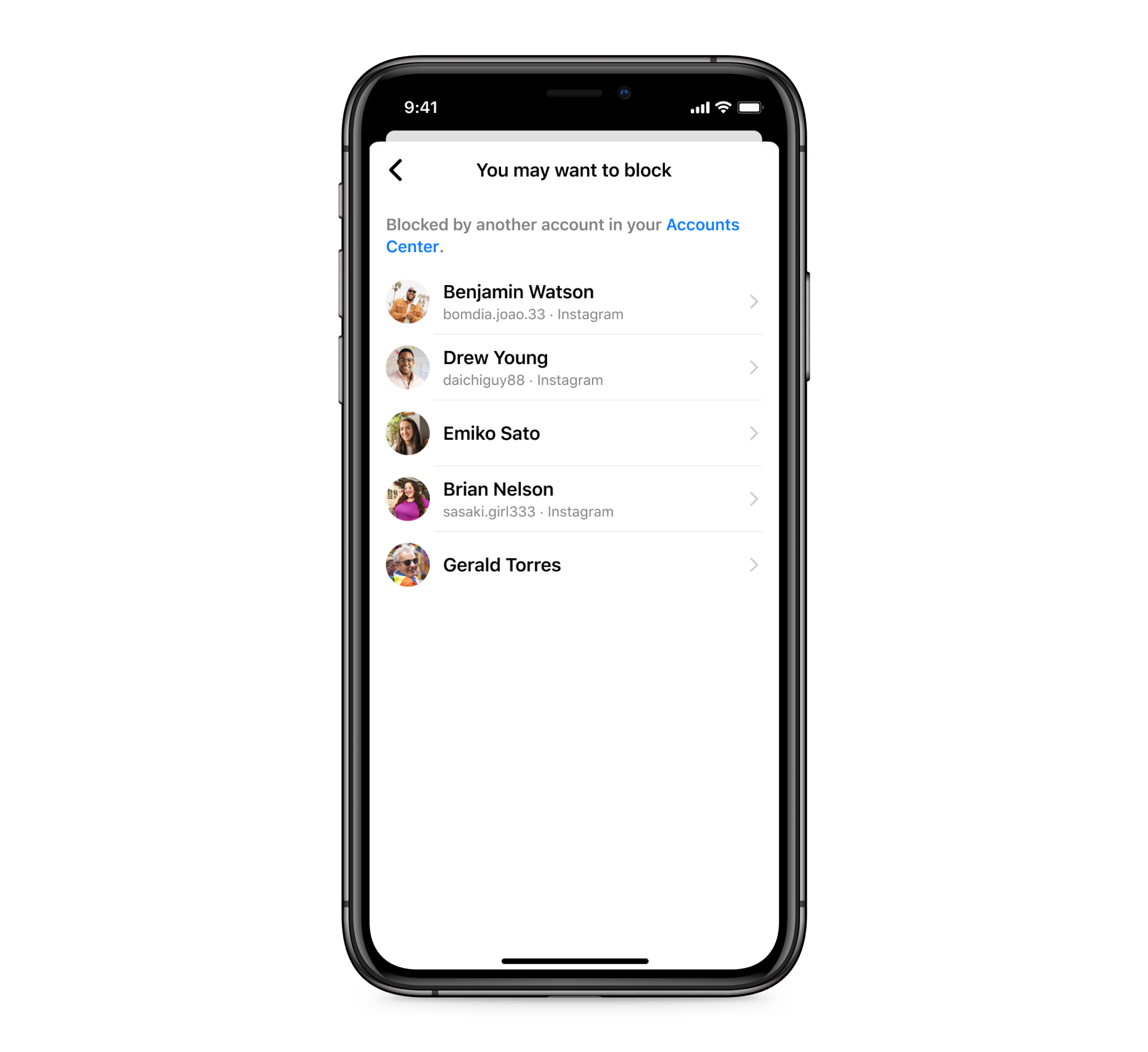

People can already block unwanted contacts in Messenger, and we’re now introducing the ability to block unwanted contacts seamlessly across Instagram DMs and Messenger. We are also making it easy to block contact from strangers, a feature that is switched on by default for any user we identify as a potential minor.

Responding to Potential Harm

Reporting is an essential tool that helps people stay safe and helps us respond to abuse effectively. We’re making it much easier to report harm by redesigning our reporting feature to be more prominent in Messenger, and we’re educating people on how to spot scammers and impersonators. We also recently made it easier to report content that violates our child exploitation policies. People can select “involves a child” as an option when reporting harm, which, along with other factors, prioritizes the report for review and action. Our goal is to encourage significantly more reporting by making it more accessible, especially among young people. As a result, we’re seeing close to 50% year-over-year growth in reporting, and we’re taking action to keep Messenger and Instagram DMs safe.

We’ll continue to enforce our Community Standards on Messenger and Instagram DMs with end-to-end encryption. Reporting decrypts portions of the conversation that were previously encrypted and unavailable to us, so that we can take immediate action if violations are found, whether they involve scams, bullying, harassment or violent crimes. In child exploitation cases, we’ll continue to report these accounts to the National Center for Missing & Exploited Children (NCMEC). Whether a violation is identified via non-encrypted parts of our platform or via user reports, we’re able to share data such as account information, account activity and inbox content from user-reported messages, in accordance with our Terms of Service and Community Standards.

We also want to encourage more people to act if they see something and to avoid sharing harmful content, even in outrage. We have begun sending alerts that inform people about the harm that sharing child exploitation content can cause, even in outrage, and warn them that it’s against our policies and will have legal consequences. We’ll continue to share these alerts in an end-to-end encrypted environment, in addition to reporting this content to NCMEC. We’ve also launched a global “Report it, Don’t Share it” campaign reminding people of the harm caused by sharing this content and the importance of reporting it.

Even in the context of encrypted systems, when law enforcement requests it, we can provide additional data to support investigations, such as who users contact, where they were when they sent a message and when they sent it.

Evolving Approach with New Technologies and Understanding

Preventing abuse on our apps requires constant iteration, so we regularly review our policies and features and listen to feedback from experts and the people using our apps, so that we can stay ahead of those who may not have the best intentions.

While building a trusted space requires ongoing innovation, flexibility and creativity, we believe that this approach of prevention, control and response offers a framework to get people the protection they need and deserve. Privacy and safety go hand-in-hand, and we’re committed to making sure they are integral to people’s messaging experiences.