Imagine creating a digital painting without ever picking up a paintbrush, or instantly generating storybook illustrations to accompany the words. Today, we’re showcasing an exploratory artificial intelligence (AI) research concept called Make-A-Scene that will allow people to bring their visions to life.

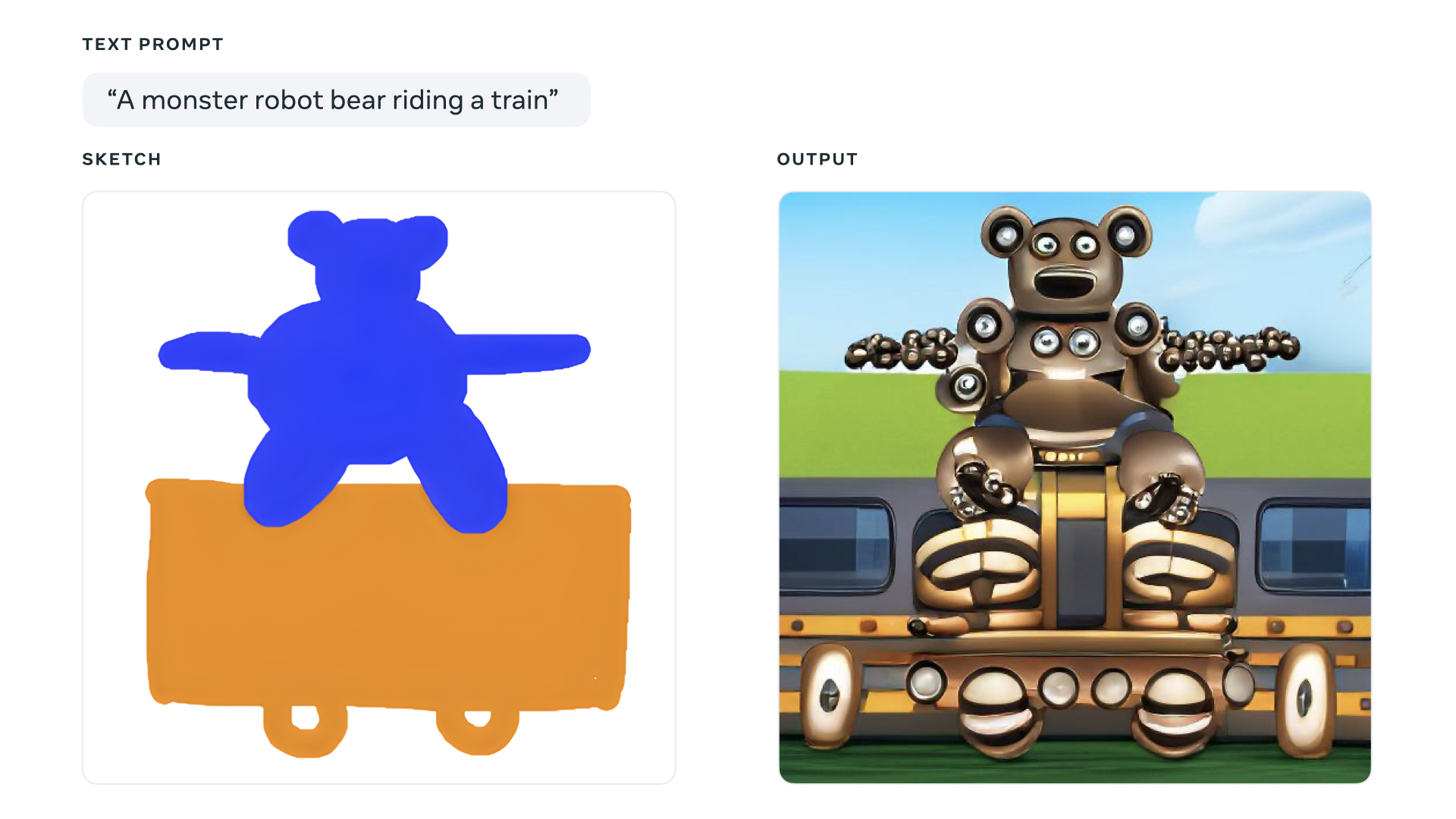

Make-A-Scene empowers people to create images using text prompts and freeform sketches. Prior image-generating AI systems typically used text descriptions as input, but the results could be difficult to predict. For example, the text input “a painting of a zebra riding a bike” might not reflect exactly what you imagined; the bicycle might be facing sideways, or the zebra could be too large or small.

With Make-A-Scene, this is no longer the case. It demonstrates how people can use both text and simple drawings to convey their vision with greater specificity, such as where each element sits in the scene and how large it is.

Make-A-Scene captures the scene layout to enable nuanced sketches as input. It can also generate its own layout with text-only prompts, if that’s what the creator chooses. The model focuses on learning key aspects of the imagery that are more likely to be important to the creator, like objects or animals.

Empowering Creativity for Artists and Non-Artists Alike

As part of our research and development process, we shared access to our Make-A-Scene demo with AI artists including Sofia Crespo, Scott Eaton, Alexander Reben and Refik Anadol.

Crespo, a generative artist focusing on the intersection of nature and technology, used Make-A-Scene to create new hybrid creatures. Using its freeform drawing capabilities, she found that she could quickly begin exploring new ideas.

“As a visual artist, you sometimes just want to be able to create a base composition by hand, to draw a story for the eye to follow, and this allows for just that.” — Sofia Crespo, AI artist

Make-A-Scene isn’t just for artists — we believe it could help everyone better express themselves. Andy Boyatzis, a program manager at Meta, used Make-A-Scene to generate art with his children, who are two and four years old. They used playful drawings to bring their ideas and imagination to life.

“If they wanted to draw something, I just said, ‘What if…?’ and that led them to creating wild things, like a blue giraffe and a rainbow plane. It just shows the limitlessness of what they could dream up.” — Andy Boyatzis, Program Manager, Meta

Building the Next Generation of Creative AI Tools

It’s not enough for an AI system to just generate content. To realize AI’s potential to push creative expression forward, people should be able to shape and control the content a system generates. Such a system should be intuitive and easy to use, so people can draw on whatever modes of expression work best for them, whether speech, text, gestures, eye movements or even sketches, to bring their vision to life.

Through projects like Make-A-Scene, we’re continuing to explore how AI can expand creative expression. We’re making progress in this space, but this is just the beginning. We’ll continue to push the boundaries of what’s possible with this new class of generative creative tools, building methods for more expressive messaging in 2D, mixed reality and virtual worlds.