Today, we’re announcing a long-term AI research initiative to better understand how the human brain processes speech and text. In collaboration with neuroimaging center Neurospin (CEA) and Inria, we’re comparing how AI language models and the brain respond to the same spoken or written sentences. We’ll use insights from this work to guide the development of AI that processes speech and text as efficiently as people. Over the past two years, we’ve applied deep learning techniques to public neuroimaging data sets to analyze how the brain processes words and sentences.

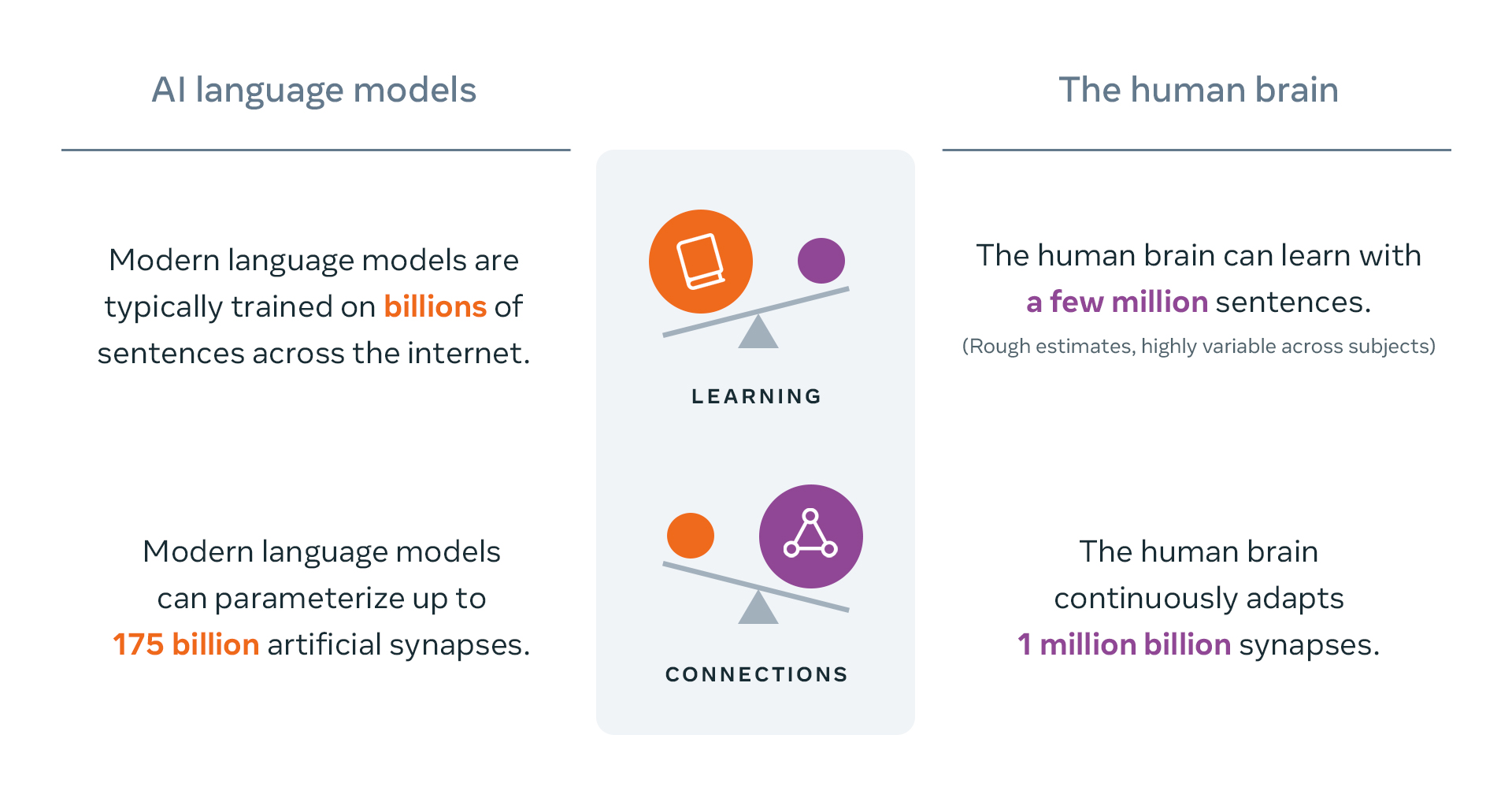

AI has made impressive strides in recent years, but it’s still far from learning language as efficiently as humans. For instance, children learn from a few examples that “orange” can refer to both a fruit and a color, but modern AI systems can’t do this as efficiently as people. This has led many researchers to wonder whether studying the human brain can help build AI systems that learn and reason like people.

Our research has found:

Using Deep Learning to Analyze Complex Brain Signals

Neuroscientists have historically faced major limitations in analyzing brain signals. Studying neuronal activity through brain imaging is a complicated process: it requires heavy machinery to record signals that are often opaque and noisy. The rise of deep learning, where multiple layers of neural networks work together to learn, is rapidly alleviating these issues. This approach highlights where and when representations of words and sentences are generated in the brain when a person reads or listens to a story.

To obtain the quantity of data that deep learning requires, our team models thousands of brain scans from public data sets recorded with functional magnetic resonance imaging (fMRI) and, at the same time, recordings made with magnetoencephalography (MEG), a scanner that takes snapshots of brain activity every millisecond. In combination, these neuroimaging devices provide the data necessary to detect where and in what order activations take place in the brain.

Predicting Far Beyond the Next Word

We recently revealed evidence of long-range predictions in the brain, an ability that still challenges today’s language models. For example, consider the phrase, “Once upon a …” Most language models today would predict the next word, “time,” but they’re still limited in their ability to anticipate complex ideas, plots and narratives as people do.

In collaboration with Inria, we compared a variety of language models with the brain responses of 345 volunteers who listened to complex narratives while being recorded with fMRI. We enhanced those models with long-range predictions to track forecasts in the brain. Our results showed that specific brain regions are best accounted for by language models enhanced with words far ahead in the text. These results shed light on the computational organization of the human brain and its inherently predictive nature, paving the way toward improving current AI models.
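
To make the idea of comparing a language model with brain responses more concrete, here is a minimal sketch of one common way such comparisons are set up: a linear (ridge) mapping from model features to recorded signals, scored by correlation on held-out data. It is an illustration under stated assumptions, not the pipeline used in this research. The data are synthetic random numbers, and the names `ridge_fit`, `brain_score`, `add_forecast` and `compare`, along with the forecast `distance` of 4 words, are hypothetical choices made for this example.

```python
import numpy as np


def ridge_fit(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def brain_score(X_train, Y_train, X_test, Y_test, alpha=1.0):
    """Mean Pearson correlation between predicted and held-out signals."""
    W = ridge_fit(X_train, Y_train, alpha)
    pred = X_test @ W
    pred_c = pred - pred.mean(axis=0)
    true_c = Y_test - Y_test.mean(axis=0)
    r = (pred_c * true_c).sum(axis=0) / (
        np.linalg.norm(pred_c, axis=0) * np.linalg.norm(true_c, axis=0)
    )
    return float(r.mean())


def add_forecast(features, distance):
    """Pair each word's features with those of the word `distance` steps ahead."""
    current = features[:-distance]
    future = features[distance:]
    return np.hstack([current, future])


def compare(seed=0, n_words=2000, n_dims=8, n_voxels=5, distance=4):
    """Score current-word features with and without a forecast window."""
    rng = np.random.default_rng(seed)

    # Stand-in for language model activations, one row per word (synthetic).
    features = rng.standard_normal((n_words, n_dims))

    # Assumption for illustration only: the simulated signal depends on both
    # the current word and a word `distance` steps in the future, plus noise.
    n = n_words - distance
    w_now = rng.standard_normal((n_dims, n_voxels))
    w_future = rng.standard_normal((n_dims, n_voxels))
    signal = features[:n] @ w_now + features[distance:] @ w_future
    signal += rng.standard_normal(signal.shape)

    base = features[:n]
    enhanced = add_forecast(features, distance)

    # Fit on the first half of the story, evaluate on the second half.
    split = n // 2
    score_base = brain_score(base[:split], signal[:split],
                             base[split:], signal[split:])
    score_enhanced = brain_score(enhanced[:split], signal[:split],
                                 enhanced[split:], signal[split:])
    return score_base, score_enhanced


if __name__ == "__main__":
    base, enhanced = compare()
    print(f"current-word features only: {base:.3f}")
    print(f"with forecast window:       {enhanced:.3f}")
```

Because the simulated signal is built to depend on a future word, the features with a forecast window score higher here by construction. With real recordings, whether and where that gain appears is the empirical question.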

Learn more about our research on how the human brain processes language.